Pre-training Protein Language Models with Label-Agnostic Binding Pairs Enhances Performance in Downstream Tasks

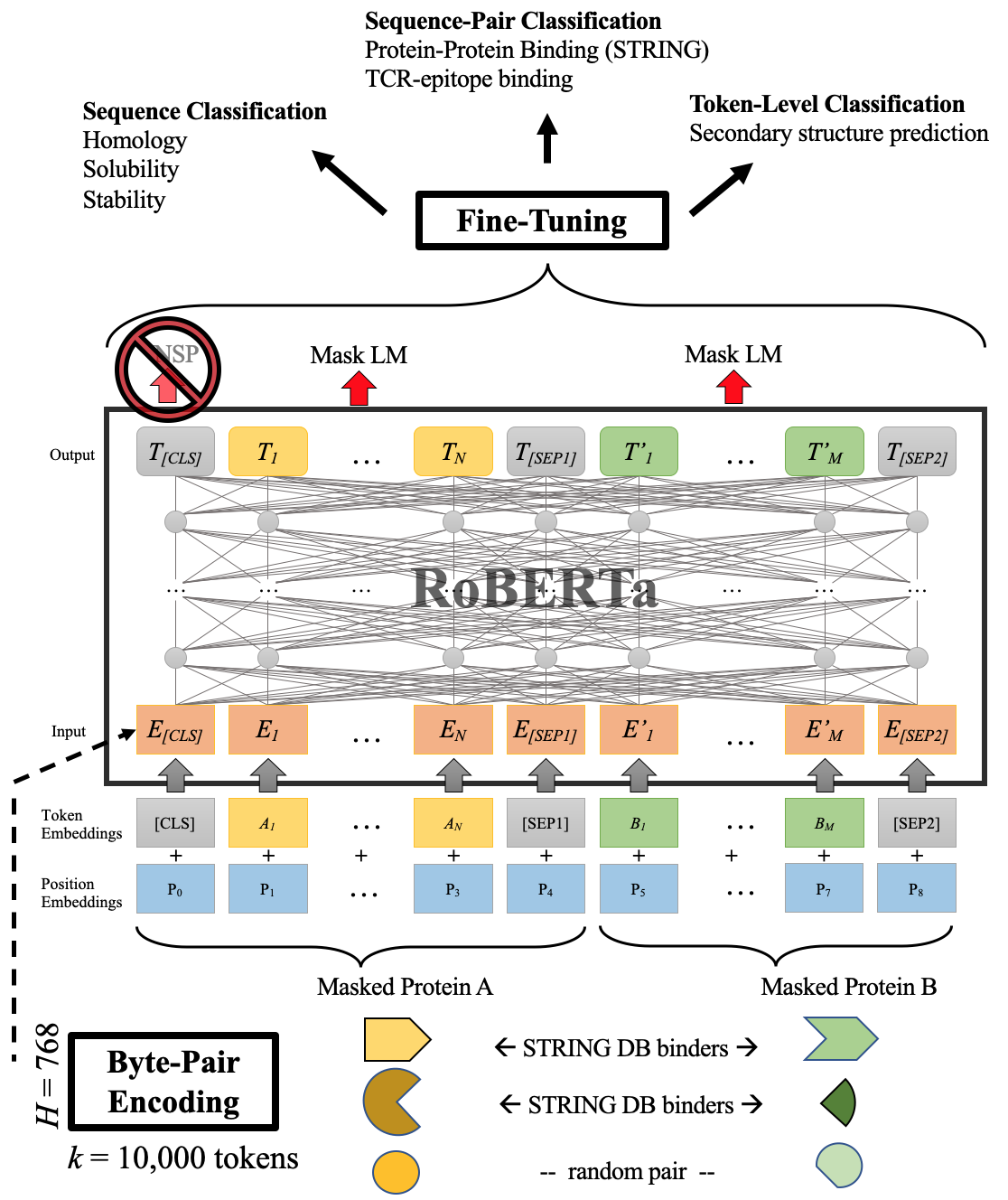

The proposed architecture is pre-trained on a mixture of binding and non-binding protein sequences using only the masked language modeling (MLM) objective. Byte-pair encoding (BPE) with a 10k-token vocabulary allows input protein sequences to be 64% longer than with character-level embedding. $E_i$ and $T_i$ denote the input and contextual embeddings of token $i$. [CLS] is a special token whose output is used for classification tasks, and [SEP] separates two non-consecutive sequences.
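The input pipeline described in the caption can be sketched in plain Python. This is a minimal sketch under stated assumptions, not the authors' implementation: the toy corpus, the merge count, and the helper names (`learn_bpe`, `apply_merge`, `encode`, `build_pair_input`, `mask_tokens`) are hypothetical. The BPE learner is the textbook greedy most-frequent-pair procedure rather than a 10k-vocabulary tokenizer trained on real data, the `[CLS] A [SEP] B [SEP]` layout is an assumed BERT-style convention, and the masking omits BERT's 80/10/10 replacement scheme.

```python
import random
from collections import Counter

# Hypothetical toy corpus; not the paper's training data.
CORPUS = [
    "MKTAYIAKQRQISFVKSHFSRQ",
    "MKTAYIAKQRQLSFVKSHFSRQ",
    "GSHMKTAYIAKQRQISFVK",
]
SPECIAL = ["[CLS]", "[SEP]", "[MASK]"]


def apply_merge(seq, pair):
    """Replace every non-overlapping occurrence of `pair` in `seq` with one token."""
    out, i = [], 0
    while i < len(seq):
        if i + 1 < len(seq) and (seq[i], seq[i + 1]) == pair:
            out.append(seq[i] + seq[i + 1])
            i += 2
        else:
            out.append(seq[i])
            i += 1
    return out


def learn_bpe(corpus, num_merges):
    """Greedy BPE: repeatedly fuse the most frequent adjacent token pair."""
    seqs = [list(s) for s in corpus]  # start from single residues (characters)
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for seq in seqs:
            pairs.update(zip(seq, seq[1:]))
        if not pairs:
            break
        best, count = pairs.most_common(1)[0]
        if count < 2:
            break  # no repeated pair left, so further merges would not compress
        merges.append(best)
        seqs = [apply_merge(seq, best) for seq in seqs]
    return merges


def encode(seq, merges):
    """Tokenize a sequence by replaying the learned merges in order."""
    tokens = list(seq)
    for pair in merges:
        tokens = apply_merge(tokens, pair)
    return tokens


def build_pair_input(tokens_a, tokens_b):
    """Assumed BERT-style pair layout: [CLS] A [SEP] B [SEP]."""
    return ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]


def mask_tokens(tokens, mask_prob=0.15, rng=None):
    """Simplified MLM masking: replace sampled non-special tokens with [MASK].

    labels[i] holds the original token at masked positions and None elsewhere
    (positions the MLM loss would ignore).
    """
    rng = rng or random.Random()
    masked, labels = [], []
    for tok in tokens:
        if tok not in SPECIAL and rng.random() < mask_prob:
            masked.append("[MASK]")
            labels.append(tok)
        else:
            masked.append(tok)
            labels.append(None)
    return masked, labels


if __name__ == "__main__":
    merges = learn_bpe(CORPUS, num_merges=20)
    a = encode("MKTAYIAKQRQISFVK", merges)
    b = encode("GSHMKTAYIAKQRQ", merges)
    print(f"{len(a)} BPE tokens cover {len('MKTAYIAKQRQISFVK')} residues")
    masked, labels = mask_tokens(build_pair_input(a, b), rng=random.Random(0))
    print(masked)
```

Because each merged token spans several residues, a model with a fixed number of token positions sees more of each protein than a character-level model would. The 64% figure in the caption comes from the authors' 10k vocabulary and is not reproduced by this toy example.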